
Buy once, own forever

Free to use for personal or evaluation purposes. Commercial use requires a license.

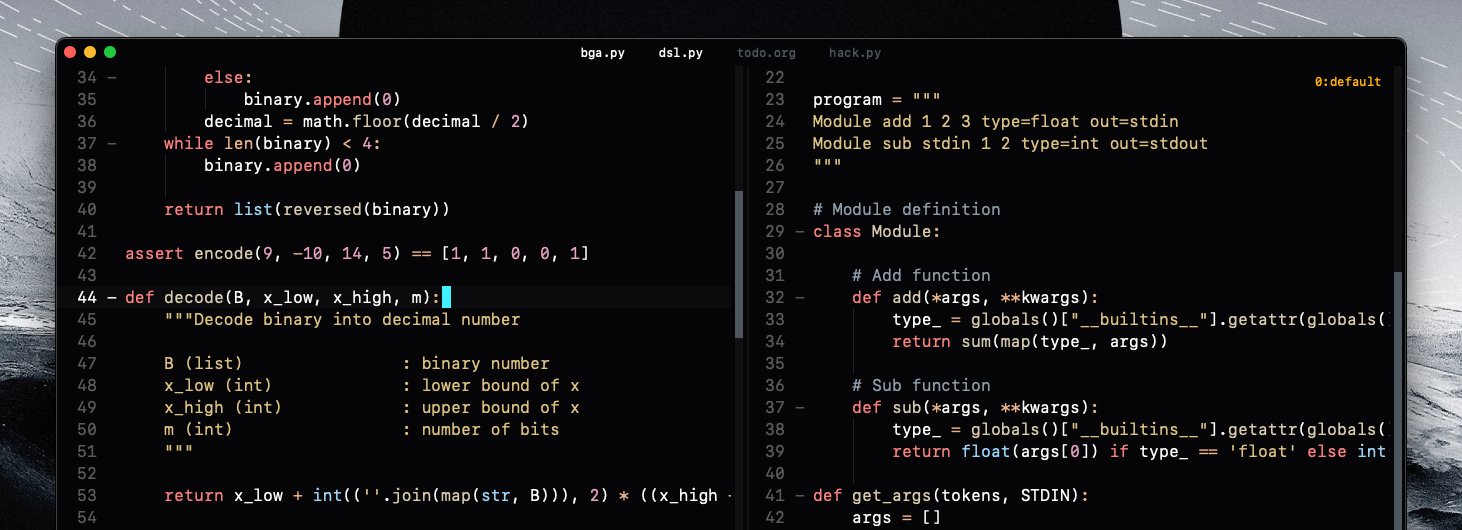

Hackerman Text is built on a simple principle: make typing feel instant, then stay out of your way.

Non-essential features are on-demand by default. There are no distracting squiggles.

Most things behave as you'd expect, so there is no learning curve.

Focus on a single file, split one file across several views, or view multiple files in the same window. Every view stays in sync and always shows the latest version of your files.

AI is local-first and built-in, ready to assist inline in any file. By default, the file is the context: edit the file to change the context.

Your configuration lives in plain text, so it can be version-controlled and shared across machines.

Free without limits for non-commercial purposes.

Local-first AI. Zero-latency typing. No telemetry, no sign in.

Minimal and fast

Built-in, native-level lexers. A minimal always-visible UI keeps the focus on code.

Our goal is to keep typing latency near zero in any language.

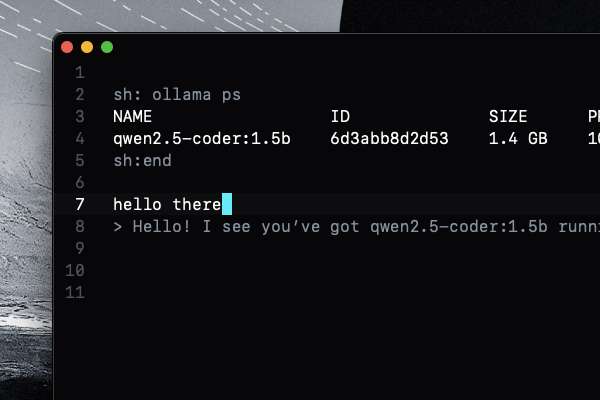

Inline AI chat

The file is the context, and the current line is the prompt. Just hit ⌘⇧⏎ to start the conversation.

Plain text chats can be saved to disk, version controlled, and opened later in any editor.

Local model support via Ollama, plus out-of-the-box integration with OpenAI and Mistral, with more providers planned over time.

Copilot-like code completion

Fast completions using local models. Just start writing and hit ⌘⇧⏎ to complete the line.

This works best with FIM (fill-in-the-middle) models; run local models via Ollama. By default, both code completion and inline AI chat run via command-chat-complete ⌘⇧⏎.
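As a rough sketch of how "the file is the context" can map onto a fill-in-the-middle request: the text before the cursor becomes the prompt and the text after it becomes the suffix. This assumes Ollama's `/api/generate` endpoint and its `suffix` field on the default local port; `build_fim_request` and the model name are placeholders, and Hackerman Text's own request format is not documented here.

```python
import json

# Default local Ollama endpoint (assumption); shown for reference, no request is sent here.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def build_fim_request(text: str, cursor: int, model: str) -> dict:
    """Split the document at the cursor into a fill-in-the-middle request body.

    Everything before the cursor becomes the prompt and everything after it
    becomes the suffix, so the whole file acts as context.
    """
    if not 0 <= cursor <= len(text):
        raise ValueError("cursor is outside the document")
    return {
        "model": model,           # placeholder: any FIM-capable model pulled into Ollama
        "prompt": text[:cursor],  # code before the cursor
        "suffix": text[cursor:],  # code after the cursor
        "stream": False,          # request a single JSON response instead of a stream
    }


if __name__ == "__main__":
    doc = "def add(a, b):\n    return \n\nprint(add(1, 2))\n"
    cursor = doc.index("return ") + len("return ")
    body = build_fim_request(doc, cursor, model="my-fim-model")
    # This JSON body could then be POSTed to OLLAMA_GENERATE_URL.
    print(json.dumps(body, indent=2))
```

The model only has to fill the gap between prompt and suffix, which is why FIM models suit line completion better than plain chat models.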

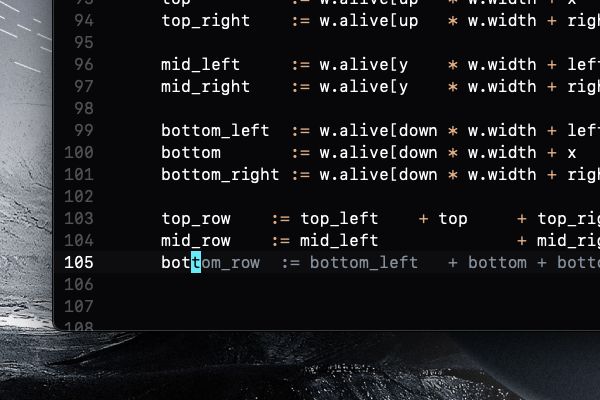

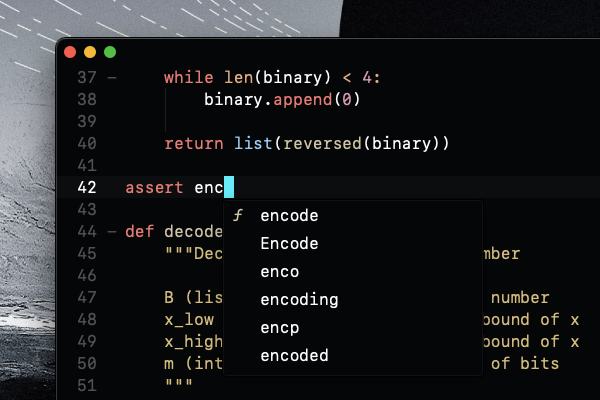

Context-aware autocomplete

Autocomplete stays fast by indexing the current file on load and updating as you type.

Suggestions come only from your document and are ranked so the most likely match appears first. Hit ⌘⏎ to select the first suggestion, or arrow down ↓ to navigate the list.
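A minimal sketch of an in-document word index, assuming identifiers are split with a simple regex and ranked by how often they occur in the file (ties broken alphabetically). The `WordIndex` class and its methods are hypothetical; the editor's actual ranking is not documented here.

```python
import re
from collections import Counter

# Assumption: a "word" is a C/Python-style identifier.
WORD = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


class WordIndex:
    """Hypothetical autocomplete index built only from the current document."""

    def __init__(self, text: str) -> None:
        # Index the whole file once, on load.
        self.counts = Counter(WORD.findall(text))

    def replace_line(self, old_line: str, new_line: str) -> None:
        # Incremental update as you type: drop the old line's words, add the new ones.
        self.counts.subtract(WORD.findall(old_line))
        self.counts.update(WORD.findall(new_line))
        # subtract() can leave zero or negative counts; remove those words.
        for word in [w for w, c in self.counts.items() if c <= 0]:
            del self.counts[word]

    def suggest(self, prefix: str, limit: int = 5) -> list[str]:
        # Most frequent matches first, ties broken alphabetically.
        matches = [w for w in self.counts if w.startswith(prefix) and w != prefix]
        matches.sort(key=lambda w: (-self.counts[w], w))
        return matches[:limit]


idx = WordIndex("token tokenize token_type\ntoken = tokenize(text)\n")
print(idx.suggest("tok"))  # ['token', 'tokenize', 'token_type']
```

Updating counts per edited line keeps each keystroke cheap, since the file is only scanned in full once.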

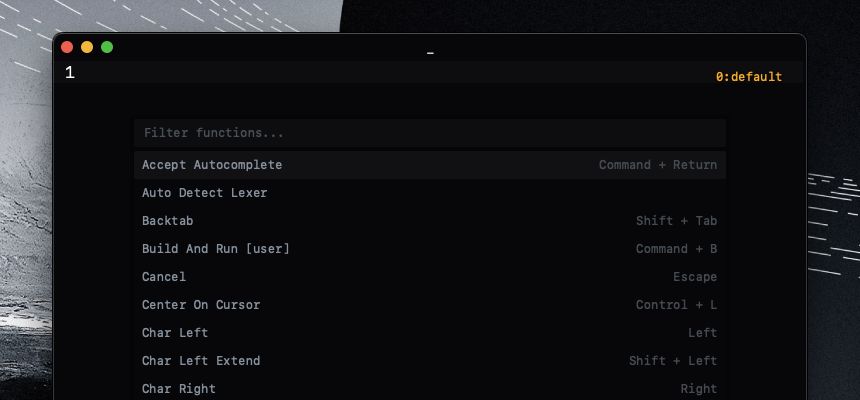

Intuitive key bindings

Shortcuts follow platform defaults, so there is no learning curve. Everything is a function, and every function is bindable.

View all key bindings from the function explorer ⌘⇧P. Most editor actions also have alternative Emacs-style bindings.

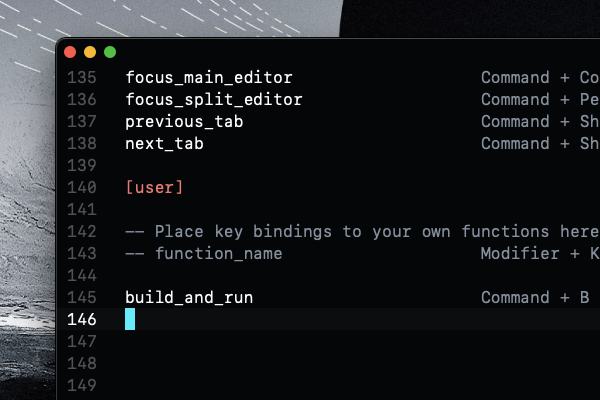

Custom bindable functions

Write your own editor commands in a small, well-defined subset of Python.

Open .hackerman-scripts.py from the function explorer ⌘⇧P (Open Scripts File). Functions hot-reload, show up in the function explorer, and can be bound to any key combination, so your custom workflows feel like built-in features.

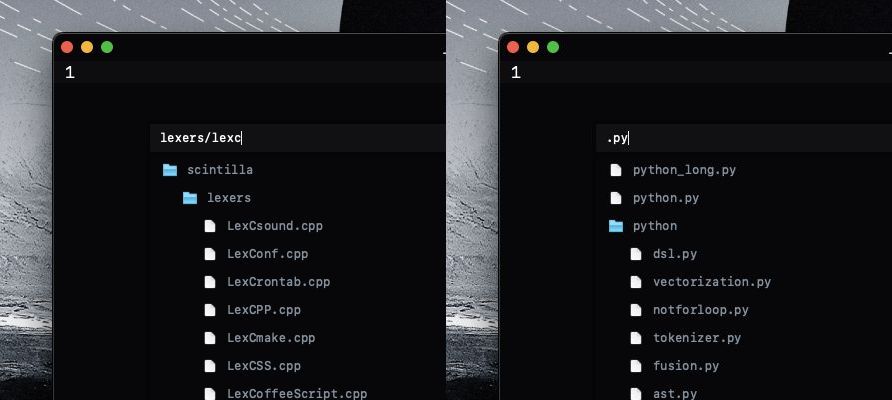

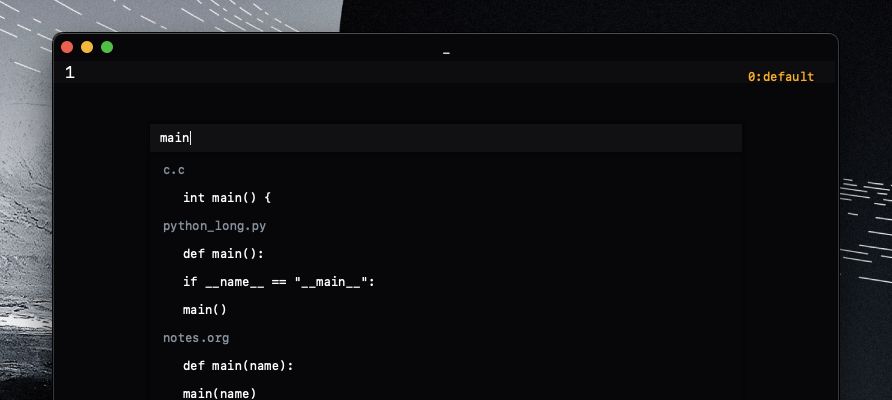

Smart file finder

Filter the file explorer ⌘E to find files instantly.

For example, type lexers/lexc for all files starting with lexc in the lexers directory, or .py for all Python files.
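The two examples above suggest simple matching rules. This sketch assumes them: a query starting with `.` matches a file extension, and otherwise `/` separates directory names from a file-name prefix. The `matches` and `filter_files` names are hypothetical, and the real finder may match more loosely.

```python
from pathlib import PurePosixPath


def matches(path: str, query: str) -> bool:
    """Hypothetical filter rules inferred from the documented examples."""
    p = PurePosixPath(path)
    if query.startswith(".") and "/" not in query:
        # ".py": match on the file extension.
        return p.suffix == query
    *dirs, name_prefix = query.split("/")
    # "lexc": the file name must start with the last query segment.
    if not p.name.startswith(name_prefix):
        return False
    if not dirs:
        return True
    # "lexers/": the file's nearest parent directories must match, in order.
    return p.parent.parts[-len(dirs):] == tuple(dirs)


def filter_files(paths: list[str], query: str) -> list[str]:
    return [path for path in paths if matches(path, query)]


files = [
    "lexers/lexc.py",
    "lexers/lexcpp.py",
    "lexers/lexpy.py",
    "tools/lexc_test.c",
    "README.md",
]
print(filter_files(files, "lexers/lexc"))  # ['lexers/lexc.py', 'lexers/lexcpp.py']
print(filter_files(files, ".py"))  # ['lexers/lexc.py', 'lexers/lexcpp.py', 'lexers/lexpy.py']
```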

Project-wide search

Use the search explorer ⌘⇧F to scan every file in the project for a pattern.

Press Enter ⏎ in the search field to export the results as plain text that you can save, share, or work from.
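A rough sketch of a project-wide search with plain-text export, assuming UTF-8 files and a grep-like `path:line: text` output format. The format and the `search_project` name are illustrative, not the editor's actual export.

```python
import re
from pathlib import Path


def search_project(root: str, pattern: str) -> str:
    """Scan every text file under root for a regex; return matches as plain text."""
    regex = re.compile(pattern)
    root_path = Path(root)
    results = []
    # Sort paths so the exported text is stable between runs.
    for path in sorted(p for p in root_path.rglob("*") if p.is_file()):
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        for lineno, line in enumerate(text.splitlines(), start=1):
            if regex.search(line):
                rel = path.relative_to(root_path).as_posix()
                results.append(f"{rel}:{lineno}: {line.strip()}")
    # One match per line: easy to save, diff, or open in any editor.
    return "\n".join(results)


if __name__ == "__main__":
    print(search_project(".", r"def tokenize"))
```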

A high-level overview of work in progress and the direction of the editor. This roadmap will evolve as priorities shift, new ideas emerge, and user feedback comes in.

Language support

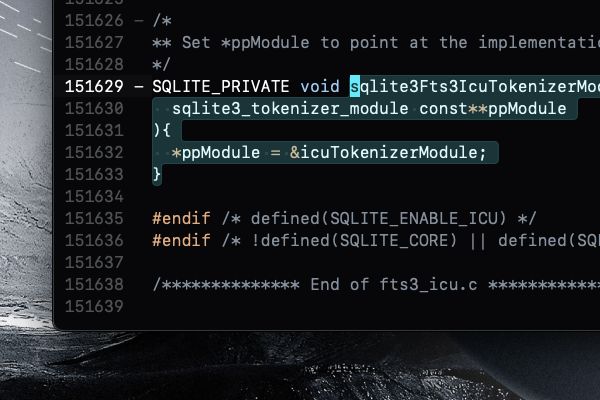

Minimal language support by design. The built-in lexers are hand-tuned for speed and precise syntax highlighting, with language-specific code folding.

Assembly

Bash

C

C#

CSS

Cython

D

Dart

Fortran

Go

Haskell

HTML

JavaScript

Kotlin

LaTeX

Lisp

Lua

Makefile

Markdown

Mojo

Nim

OCaml

Odin

Pascal

Perl

PHP

PowerShell

Prolog

Python

R

Ruby

Rust

Scala

Swift

TypeScript

Verilog

Zig

Jai

Turn your late-night ideas into morning commits.

We're 100% user-funded

Free without limits for non-commercial purposes. Buy a license for commercial use and to support continued independent development.

Personal (early bird)

$195 (regular price $325)

$3.25 per month over 5 years (regular $5.42)

Personal license for commercial use

Single user, unlimited machines

One-time payment, no expiry

This is an early bird offer for a limited time.

Teams

$595 /year

$4.96 per month, per user

Commercial license for multiple users

Up to 10 users per license (stackable), unlimited machines per user

One-year expiry

For teams who pay to reduce dev friction.

Business

$5995 /year

$3.33 per month, per user, at 150 seats

Commercial license for unlimited users

Unlimited users, unlimited machines per user

One-year expiry

Get started with Hackerman Text

Download